Motivating example#

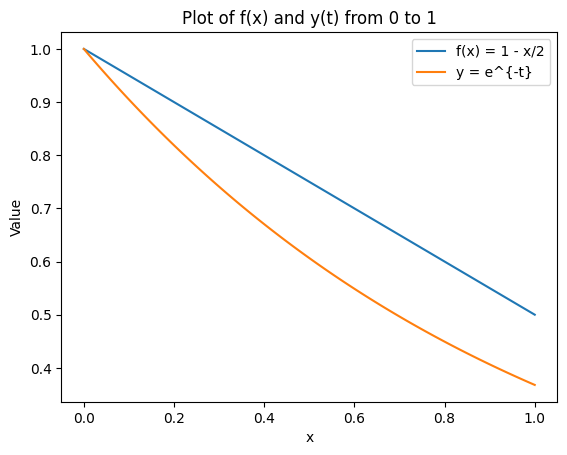

Consider the ODE \( y^\prime = -y \) with \( y(0) = 1 \), which has the analytical solution \(y = e^{-t}\).

We wish to approximate this function numerically on \([0,1]\) with a straight line defined by its boundary values, \(f(0) = a = 1\) and \(f(1) = b\): \(f(x) = (1-x) + x b\). Here \(x\) plays the role of \(t\).

How do we find \(b\)?

Option 1: Direct substitution#

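Following the finite difference method, we can substitute the trial function into the ODE at \(x = 1\) and solve for \(b\). With \(f^\prime(x) = b - 1\) and \(f(1) = b\):

\[ f^\prime(1) = -f(1) \quad\Rightarrow\quad b - 1 = -b \quad\Rightarrow\quad b = \frac{1}{2} \]

and the approximate solution is \(f(x) = 1 -\frac{x}{2}\).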

```python
import numpy as np
import matplotlib.pyplot as plt

# Define the functions
f = lambda x: 1 - x / 2     # linear approximation from Option 1
y = lambda t: np.exp(-t)    # analytical solution

# Generate values
x = np.linspace(0, 1, 100)
f_values = f(x)
y_values = y(x)

# Plot the functions
plt.plot(x, f_values, label='f(x) = 1 - x/2')
plt.plot(x, y_values, label='y = e^{-t}')
plt.xlabel('x')
plt.ylabel('Value')
plt.legend()
plt.title('Plot of f(x) and y(t) from 0 to 1')
plt.show()
```

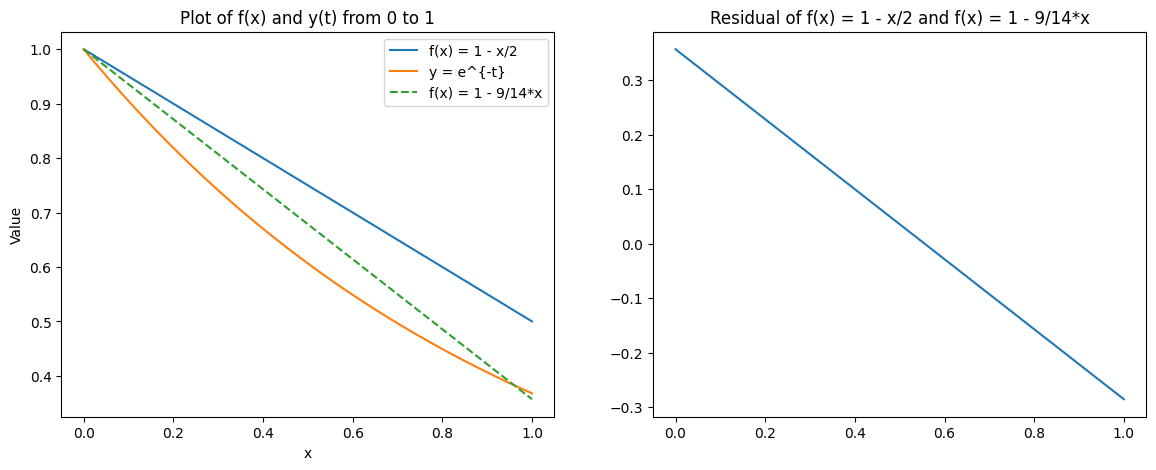

Option 2: Least-squares minimization of the residual#

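Let’s plug \(f(x)\) into the ODE, written as \(y^\prime + y = 0\), to get the residual, and integrate its square over the domain:

\[ R(x) = f^\prime + f = (b - 1) + (1 - x) + x b = b(1+x) - x \]

\[ F(b) = \int_0^1 R^2 \, dx = \frac{7}{3} b^2 - \frac{5}{3} b + \frac{1}{3} \]

Note that the integral of the squared residual, \(F\), is a parabolic function of the parameter \(b\). Its minimum is found where \(\frac{\partial F}{\partial b} = \frac{14}{3} b - \frac{5}{3} = 0\), i.e. at \(b = \frac{5}{14}\). The approximate solution is \(f(x) = 1-\frac{9}{14}x\).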
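The least-squares value of \(b\) can be checked with exact rational arithmetic. The following is a minimal sketch, assuming the residual of the linear trial function is \(R = b(1+x) - x\); the helper names `poly_mul` and `integrate_01` are illustrative and not part of the original notebook.

```python
from fractions import Fraction

def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists [c0, c1, ...]."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, c in enumerate(q):
            out[i + j] += Fraction(a) * Fraction(c)
    return out

def integrate_01(p):
    """Exact integral over [0, 1] of sum_k p[k] * x**k."""
    return sum(Fraction(c) / (k + 1) for k, c in enumerate(p))

# Residual of the trial function: R(x) = f' + f = b*(1 + x) - x
g = [1, 1]  # part of R that multiplies b: 1 + x
h = [0, 1]  # remaining part of R:         x

# F(b) = int_0^1 R^2 dx = A*b**2 - 2*B*b + C
A = integrate_01(poly_mul(g, g))
B = integrate_01(poly_mul(g, h))
C = integrate_01(poly_mul(h, h))

b_ls = B / A  # vertex of the parabola, where dF/db = 0
print(A, B, C, b_ls)  # prints 7/3 5/6 1/3 5/14
```

Because \(F\) is a parabola in \(b\), its minimum is simply the vertex, so no iterative optimizer is needed.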

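```python
# Define the new function (least-squares fit from Option 2)
f_new = lambda x: 1 - 9 / 14 * x
f_new_values = f_new(x)

fig, axs = plt.subplots(1, 2, figsize=(14, 5))

# Plot the functions
axs[0].plot(x, f_values, label='f(x) = 1 - x/2')
axs[0].plot(x, y_values, label='y = e^{-t}')
axs[0].plot(x, f_new_values, label='f(x) = 1 - 9/14*x', linestyle='--')
axs[0].set_xlabel('x')
axs[0].set_ylabel('Value')
axs[0].legend()
axs[0].set_title('Plot of f(x) and y(t) from 0 to 1')

# Plot the residual R = f' + f of both approximations
residual_direct = lambda x: -1 / 2 + 1 - x / 2    # f(x) = 1 - x/2
residual = lambda x: -9 / 14 + 1 - 9 / 14 * x     # f(x) = 1 - 9/14*x
axs[1].plot(x, residual_direct(x), label='f(x) = 1 - x/2')
axs[1].plot(x, residual(x), label='f(x) = 1 - 9/14*x', linestyle='--')
axs[1].set_xlabel('x')
axs[1].set_ylabel('Residual')
axs[1].legend()
axs[1].set_title('Residual of f(x) = 1 - x/2 and f(x) = 1 - 9/14*x')
plt.show()
```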

We see that option 2 does a better job of approximating the function over the full domain, since it minimizes the integral of the squared residual instead of enforcing the ODE at a single point.
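As a rough numerical check of this comparison, the sketch below measures the largest deviation of each line from \(e^{-x}\) on a uniform grid. The function name `max_abs_error` and the grid size are assumptions chosen for illustration.

```python
import math

def max_abs_error(b, n=1001):
    """Largest |f(x) - exp(-x)| on n uniform grid points in [0, 1],
    for the trial line f(x) = (1 - x) + b*x."""
    worst = 0.0
    for i in range(n):
        x = i / (n - 1)
        worst = max(worst, abs((1 - x) + b * x - math.exp(-x)))
    return worst

err_direct = max_abs_error(1 / 2)   # Option 1: direct substitution
err_ls = max_abs_error(5 / 14)      # Option 2: least squares

print(f"direct substitution: {err_direct:.4f}")
print(f"least squares:       {err_ls:.4f}")
```

The maximum error is only one possible measure; an integrated (L2) error would be an equally reasonable choice here.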

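You may have noticed that we did something funny here: we integrated \(R^2\) and then took its derivative with respect to \(b\) in order to find the minimum of the integral! I.e.:

\[ \frac{\partial}{\partial b} \int_0^1 R^2 \, dx = 2 \int_0^1 R \, \frac{\partial R}{\partial b} \, dx = 0 \quad\Rightarrow\quad \int_0^1 R \, v \, dx = 0, \qquad v = \frac{\partial R}{\partial b} \]

where the residual has now been weighted by the function \(v\) (here \(R = b(1+x) - x\), so \(v = 1 + x\)). This is called the Method of Weighted Residuals (MWR). Let’s try a different function and see what happens.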

Option 3: Integrate the MWR to find the parameters#

Integrate the weighted residual function directly with a convenient choice of \(v\).

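Let’s take \(v = x\) (to be motivated later) and integrate, with \(R = f^\prime + f = b(1+x) - x\):

\[ \int_0^1 R \, x \, dx = \int_0^1 \left[ b(1+x) - x \right] x \, dx = \frac{5}{6} b - \frac{1}{3} = 0 \quad\Rightarrow\quad b = \frac{2}{5} \]

which is very close to our previous solution \(b = \frac{5}{14}\) but with a simpler integration. BUT, we are still left with integrating analytically! If only there were a way to express integrals as the sum of the integrand evaluated at certain points…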
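The weighted-residual condition with \(v = x\) reduces to integrals of monomials, which can be evaluated exactly. This is a small sketch under the assumption that \(R = b(1+x) - x\); `monomial_integral` is a hypothetical helper introduced only for this check.

```python
from fractions import Fraction

def monomial_integral(k):
    """Exact value of int_0^1 x**k dx."""
    return Fraction(1, k + 1)

# R(x) * v(x) with v = x:
#   (b*(1 + x) - x) * x = b*(x + x**2) - x**2
coeff_b = monomial_integral(1) + monomial_integral(2)  # multiplies b
const = monomial_integral(2)                           # term without b

# int_0^1 R*v dx = coeff_b*b - const = 0
b_mwr = const / coeff_b
b_ls = Fraction(5, 14)  # least-squares value from Option 2

print(b_mwr, float(b_mwr), float(b_mwr - b_ls))
```

Since \(R\) is linear in \(b\), the condition is a single linear equation, which is the one-unknown version of the linear systems that appear with more parameters.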

Option 4: Express the MWR integral as the sum of the integrand evaluated at certain points#

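Evaluate the weighted residual integral, with a convenient choice of \(v\), as a weighted sum of the integrand evaluated at certain points:

\[ \int_0^1 R \, v \, dx \approx \sum_i w_i \, R(x_i) \, v(x_i) = 0 \]

Recall that Gaussian quadrature allows us to evaluate an integral by summing the integrand at the Gauss points. The two-point Gauss points for the domain \([-1,1]\) are \(\pm\frac{1}{\sqrt{3}}\), each with weight 1. Scaled to this integration domain, \([0,1]\), they become

\[ x_{1,2} = \frac{1}{2}\left(1 \mp \frac{1}{\sqrt{3}}\right), \qquad w_{1,2} = \frac{1}{2} \]

and

\[ \int_0^1 R \, v \, dx = \frac{1}{2}\left[ R(x_1) \, v(x_1) + R(x_2) \, v(x_2) \right] = 0 \]

For \(v = x\) this gives \(b = \frac{2}{5}\). The result is exact because the integrand is quadratic in \(x\) and the two-point rule integrates polynomials up to degree 3 exactly.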
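The quadrature step can be sketched in a few lines using only function evaluations. This assumes the two-point Gauss-Legendre rule, the weight function \(v = x\), and the residual \(R = b(1+x) - x\); the variable names are illustrative.

```python
import math

# Two-point Gauss-Legendre rule on [-1, 1]: points +-1/sqrt(3), weights 1
ref_points = (-1 / math.sqrt(3), 1 / math.sqrt(3))
ref_weights = (1.0, 1.0)

# Affine map to [0, 1]: x = (xi + 1) / 2, so the weights scale by 1/2
points = [(xi + 1) / 2 for xi in ref_points]
weights = [w / 2 for w in ref_weights]

def v(x):
    """Weight function (assumed v = x, as in Option 3)."""
    return x

# sum_i w_i * R(x_i) * v(x_i) = 0 with R = b*(1 + x) - x is linear in b:
#   b * sum_i w_i*(1 + x_i)*v(x_i) - sum_i w_i*x_i*v(x_i) = 0
coeff_b = sum(w * (1 + x) * v(x) for w, x in zip(weights, points))
const = sum(w * x * v(x) for w, x in zip(weights, points))
b_gauss = const / coeff_b

print(points, b_gauss)
```

Up to floating-point rounding this reproduces the analytically integrated value, since the integrand here is a quadratic polynomial.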

Recap of the approach#

Let’s recap what we’ve done:

- Parameterized a function as a weighted sum of simpler functions (a linear basis).

- Found a (simple) integral expression that minimizes the error in the approximation (Method of Weighted Residuals).

- Performed the integration exactly using only function evaluations, which turns it into a linear system (Gaussian quadrature).

- Solved the linear system (I told you everything boiled down to linear systems!).

Practical solution of linear systems requires sparsity! Let’s formalize our procedure and see how we can ensure sparsity.